In July, Microsoft plans to introduce an app consent policy that stops users from granting third-party apps access to their files and sites. Letting users grant unsupervised consent for third-party apps to access files stored in OneDrive for Business and SharePoint Online is a bad idea. Some apps certainly need such access, but requiring one-time administrator approval for them is no hardship.

Several sites reported on a Microsoft 365 Copilot penetration test that allegedly proved Copilot can extract sensitive data from SharePoint Online. A closer look shows that the test depends on three major assumptions: that an attacker has already compromised the tenant, that the tenant is poorly managed, and that the organization has not deployed the tools available to it. Other issues deserve more attention from tenant administrators, such as users uploading SharePoint and OneDrive files to ChatGPT for processing.

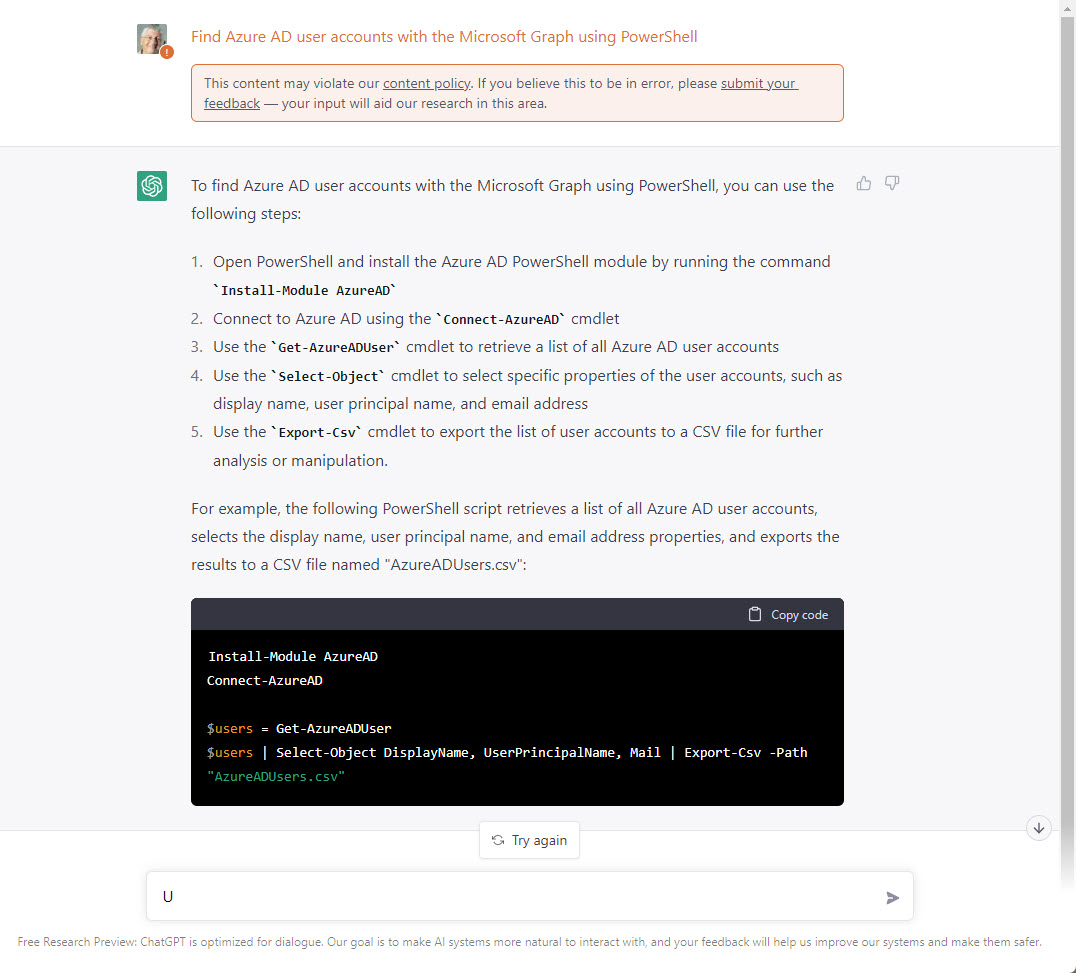

Microsoft 365 users can connect their OneDrive for Business accounts to ChatGPT. This is not a good thing because it creates the potential for sensitive corporate information to leak outside the organization. How can you block ChatGPT access to OneDrive? The best way is to stop people from using the ChatGPT app. If that's not possible, make sure that confidential files are encrypted with sensitivity labels.

Generative AI tools are nice to have, but the information used to train the LLMs behind these tools must come from somewhere. The impact of generative AI on technology websites is very real, and it will have a far-reaching effect if websites close because of reduced traffic and revenue. How will the LLMs used by generative AI refresh their knowledge if websites no longer create that information for them (for free)?

The ChatGPT project is an interesting and worthwhile examination of how artificial intelligence can generate answers to questions. However, the quality of those answers depends on the source material, and the early signs are that ChatGPT isn't great at answering questions about Microsoft 365.